Radical Empiricism: An Overview

The list of jobs likely to be automated within a decade is getting longer. Thanks to GPT-3, writers are starting to sweat. Perhaps more surprisingly, “scientific researcher” might soon be on the same list. A revolution referred to as “Radical Empiricism” is underway, and it could change the scientific method itself. That is unexpected, since science is much more creative, unstructured and complex than, say, driving a car. However, deep learning algorithms can outperform humans at finding solutions to devilishly hard, complex problems, and it turns out that science, and biology in particular, has plenty of those to solve.

AlphaGo made the world wake up and say, "Holy shit" at what deep learning is capable of. Because the number of possible board states is so large, an algorithm can’t simply pattern-match against previous games or compute all possible future games after each move (see Two Minute Papers for more). Instead, AlphaGo developed something akin to human intuition for choosing its next move, revealed strategies that humans weren’t even aware of, and handily defeated Lee Sedol, one of the world’s best players, all the way back in 2016. DeepMind followed up in 2017 with AlphaGo Zero, which was even stronger and removed even more human knowledge from the equation. Incredibly, it took only the rules of Go as input and figured out the rest through self-play.

The historical arc in programming perception-based tasks, from Go bots to image recognition, began with humans explicitly encoding rules, which proved brittle and hard to work with, and then moved to machine learning (ML) models, which learn their rules automatically from data. Within ML, there has been a progression from simpler models (regressions, decision trees, etc.) to deep learning, which can represent far more complex relationships. This approach has been successful enough to achieve superhuman performance across a wide variety of tasks. We don't know exactly how these models make their inferences, but the results speak for themselves.

We can imagine a similar arc unfolding in the way we do science. Today, we start with a question, gather evidence, and, if all goes well, develop a theory or explanation that can drive future investigation and experiments. This is done in a highly bespoke way, and creating the theory relies on highly trained experts. When ML enters the picture, we still need experimental evidence, but we hand this data to an algorithm to figure out the rest. We don’t get a tangible theory, but we do get the ability to use this knowledge to make new predictions. Interestingly, the algorithm generally doesn’t “stand on the shoulders of giants” by incorporating past scientific laws; it forges its own path from the raw data instead. In this world, our task is simply to provide the algorithm with the right information so that it can learn the inference we need. Vijay Pande of a16z has described this as Industrialized Discovery, since its output relies on repeatable processes that can scale and improve over time, rather than on a lone artisan (or scientist, in this case) continuing to create new game-changing outputs.

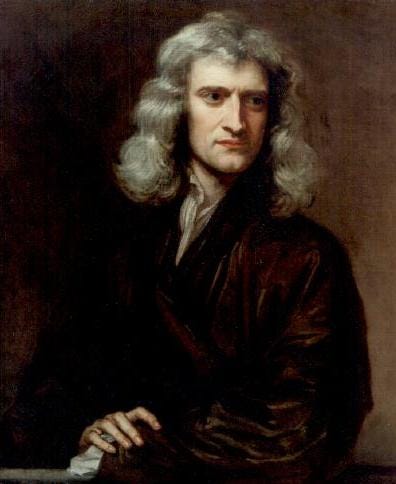

A classic scientific artisan, Isaac Newton. If only we could horizontally scale his brain, and refine his skills 1% per year in the centuries since.

What's happening in that magic ML black box? At one extreme, we can create an “over-fit” model that essentially memorizes the mapping from inputs to outputs and utterly fails on new data it hasn't seen before. This is not what we want. At the other extreme, we might create a model that is truly general, perhaps even universal, and will make perfect predictions in the future. An image classification algorithm that has fully absorbed the essence of "catness" will always be able to identify a cat in new images. An algorithm predicting the trajectories of objects that has discovered the kinematic equations of physics will accurately predict all future trajectories. We can assess where a model lies on this spectrum by its performance on new, unseen data, but unfortunately we can’t directly inspect the algorithm to extract these new, potentially beautiful rules of inference. Determining why a deep learning model made a certain decision is an area of active research, but for the near future we’ll have to live with this limitation.

Representation of a cat-recognizing neuron, from Google.
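To make the over-fit versus general spectrum concrete, here is a minimal sketch in plain Python using the trajectory example. It simulates noisy height measurements of a thrown rock during its first second of flight, then compares two hypothetical models on later, unseen times: a "memorizer" that returns the nearest stored observation, and a least-squares quadratic fit that stands in for discovering the kinematic equation. The launch height, speed, noise level and time points are arbitrary assumptions, and unlike a deep learning model, the quadratic fit is handed the right functional form in advance.

```python
import random

G_TRUE = 9.81  # m/s^2; only used to simulate the "experiment"

def true_height(t: float, y0: float = 2.0, v0: float = 15.0) -> float:
    """Height of a thrown rock with no air resistance: y0 + v0*t - g*t^2/2."""
    return y0 + v0 * t - 0.5 * G_TRUE * t * t

def solve_3x3(m, b):
    """Solve m @ x = b by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, b)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            factor = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= factor * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        s = sum(a[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = (a[r][3] - s) / a[r][r]
    return x

def fit_quadratic(ts, ys):
    """Least-squares fit of y = c0 + c1*t + c2*t^2 via the normal equations."""
    rows = [[1.0, t, t * t] for t in ts]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    aty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    return solve_3x3(ata, aty)

def make_memorizer(train):
    """'Over-fit' model: answers with the stored output of the nearest training input."""
    def predict(t):
        return min(train, key=lambda pair: abs(pair[0] - t))[1]
    return predict

def mse(predict, pairs):
    """Mean squared error of predict(t) against the observed y values."""
    return sum((predict(t) - y) ** 2 for t, y in pairs) / len(pairs)

rng = random.Random(42)
# Training data: 21 noisy measurements over the first second of flight.
train = [(i * 0.05, true_height(i * 0.05) + rng.uniform(-0.01, 0.01)) for i in range(21)]
# Held-out data: later times that neither model saw during fitting.
test = [(t, true_height(t)) for t in (1.5, 2.0, 2.5)]

c0, c1, c2 = fit_quadratic([t for t, _ in train], [y for _, y in train])

def quadratic(t):
    return c0 + c1 * t + c2 * t * t

g_estimate = -2.0 * c2  # c2 should approximate -g/2
memorize = make_memorizer(train)

print(f"memorizer: train MSE={mse(memorize, train):.5f}, test MSE={mse(memorize, test):.3f}")
print(f"quadratic: train MSE={mse(quadratic, train):.5f}, test MSE={mse(quadratic, test):.3f}")
print(f"recovered g = {g_estimate:.2f} m/s^2")
```

Both models look good on the data they were fitted to: the memorizer's training error is exactly zero. Only the held-out, later times reveal the difference, where the quadratic fit extrapolates well and recovers a value of g close to the one used to simulate the data.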

One of the most amazing things about science is that natural phenomena are generally governed by simple mathematical rules and principles. Why should it be true that a thrown rock falls in a parabola? It could just as easily follow a trajectory from a random number generator, but in this universe, nature tends to admire simplicity and respect mathematics, and the models are out there, waiting to be discovered.
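For the thrown rock, the parabola follows from two lines of kinematics, assuming no air resistance, constant gravitational acceleration $g$, and a launch from the origin with horizontal speed $v_x$ and vertical speed $v_y$:

$$
x(t) = v_x t, \qquad y(t) = v_y t - \tfrac{1}{2} g t^2
$$

Substituting $t = x / v_x$ eliminates time:

$$
y(x) = \frac{v_y}{v_x}\,x - \frac{g}{2 v_x^2}\,x^2
$$

which is a quadratic in $x$, that is, a parabola.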


From dark matter in physics to protein folding in biology, there's no shortage of unsolved problems that continue to resist our best efforts to model and understand them. Again, consider two extremes. At one, the underlying law is extremely simple, but we just don't have the right experiment or background understanding to discover it. At the other, the underlying principle is highly complex, perhaps too complex for humans to even fathom. If the latter is true, we're in trouble, as it means we could continue with our current approach forever and never discover it. Naturally, humans can only discover models that are simple enough for us to grasp. Nature tends towards this level of simplicity, but there's no guarantee that will always be the case.

Pragmatically, even if an algorithm doesn't discover new universal laws, its "knowledge" can be robust and general enough to make great predictions, even when the underlying model is highly complex. Humans aren't very good protein engineers, even after decades of experience, but algorithms are showing increasing promise. Biology is generally highly complex, and it is full of opportunities for algorithms to build an understanding of that complexity and suggest how to modify the system in a desirable way.

If we want to coax these deep learning models to tell us nature’s secrets, some conditions must be met:

1. The inputs and outputs to the algorithm are well structured and provide a clear idea of what "good" looks like. For example, the input can be the molecular structure of a new antibiotic, and the output whether the bacteria died when exposed to it. Structuring and digitizing biology is hard, but possible with careful assay selection.

2. There is an immense amount of this data, enough for the algorithm to extract the implicit information it needs to build a robust model. Biology works well in automated, high-throughput labs, so it's possible to run billions or even trillions of samples in a matter of days or weeks.

Once these conditions are satisfied, the real challenge is to ask the right question. You're exploring a massive search space, and it's in your best interest to constrain it as much as possible. It's better to ask "What amino acid sequence will produce a protein that binds this target?" than "What drug will cure all types of cancer?". Then you figure out how to automatically perturb your cells and measure whether each change helped or hindered. If you're getting really fancy, you'll have the algorithm iteratively explore parts of the search space, proposing the next round of perturbations to test. And then you converge on... something valuable enough to justify this expensive endeavor in the first place. That likely means a drug, but plenty of other molecules across biology and materials science could qualify as well.
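The propose, test and select loop described above can be sketched as a toy closed-loop search. Everything here is hypothetical: `toy_assay` stands in for a real lab measurement by counting positions that match a hidden target sequence, the target string is arbitrary, and the proposal step is plain greedy hill climbing with random single-residue mutations. A real pipeline would typically use a trained model to decide which perturbations to test next.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard one-letter codes

def toy_assay(seq: str, target: str) -> int:
    """Hypothetical stand-in for a wet-lab readout: count positions matching a hidden target."""
    return sum(a == b for a, b in zip(seq, target))

def mutate(seq: str, rng: random.Random) -> str:
    """Propose a perturbation: replace one randomly chosen residue."""
    i = rng.randrange(len(seq))
    return seq[:i] + rng.choice(AMINO_ACIDS) + seq[i + 1:]

def closed_loop_search(target: str, rounds: int = 30, batch_size: int = 24, seed: int = 0):
    """Greedy propose-assay-select loop; returns the best sequence, its score, and per-round history."""
    rng = random.Random(seed)
    best = "".join(rng.choice(AMINO_ACIDS) for _ in target)  # random starting sequence
    best_score = toy_assay(best, target)
    history = [best_score]
    for _ in range(rounds):
        # Propose a batch of perturbations around the current best candidate.
        batch = [mutate(best, rng) for _ in range(batch_size)]
        # "Run" the toy assay on every candidate in the batch and take the top scorer.
        top_score, top_seq = max((toy_assay(s, target), s) for s in batch)
        # Keep the winner only if it actually improved the readout.
        if top_score > best_score:
            best, best_score = top_seq, top_score
        history.append(best_score)
    return best, best_score, history

best, score, history = closed_loop_search("MKTAYIAKQR")
print(best, score, history)
```

Because a candidate is only accepted when it beats the current best, the recorded score never decreases from one round to the next; the open question in practice is how quickly a smarter proposal step can climb.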

This is the essential formula being applied by Recursion Pharma, Insitro, LabGenius, and many others, and they're showing some promising early results. This technology could radically increase the speed and decrease the cost of R&D for new drugs. This could mean treatments for the currently untreatable, cures for the currently incurable, and lower costs for those receiving the medicines. But it's still very early, and it remains to be shown if this approach will bear fruit.

One thing is certain: it's a very exciting time to be a nerd in biology and ML.

Check out this podcast episode from The Economist or this interview with Zavain Dar and Nan Li for more.